Self-hosted Kubernetes

2024-09-05

At the moment of writing this article, the website you're reading it on is running on a self-hosted Kubernetes cluster. I also run a bunch of other services on top of k8s, namely an RSS reader, analytics software, etc.

I thought I'd share some technical insights.

Server setup costs

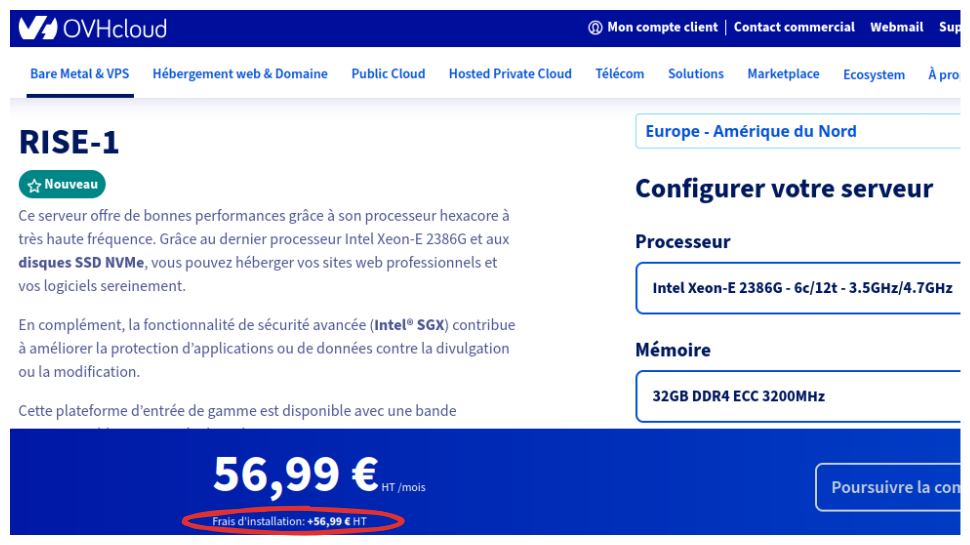

I host Kubernetes on top of dedicated servers from OVH. Depending on OVH's offering, there are sometimes setup fees.

That means I need to determine the kind of hardware I'll need in advance, to avoid changing hardware too frequently and incurring additional setup costs every time.

I currently pay around 100€/month for a cluster with 3 worker nodes, a total of 96GiB RAM and 12 CPU cores, and a separate control plane without high availability.

I started with a cluster built from low-cost virtual private servers for about 25€/month total, but it turned out that once I started instrumenting the cluster with monitoring and whatnot, I needed to upgrade to beefier servers.

Private networking

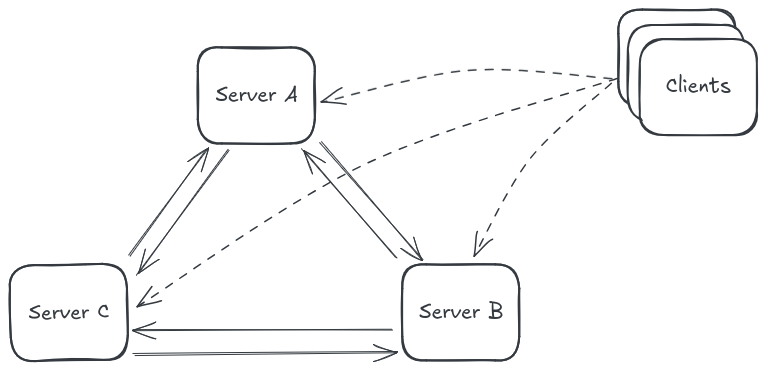

For private networking, I've opted for a vendor-independent solution, namely WireGuard. The goal is to be able to rent commodity hardware from any provider and connect the servers with a WireGuard mesh network to build my own virtual multi-region datacenter.
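To make the mesh idea a bit more concrete, here's a minimal sketch of how per-node WireGuard configs could be generated, with every other server listed as a peer. The node names, IP addresses, port and key placeholders are all made up for illustration, and this is not the tooling I actually use.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Node:
    name: str
    public_key: str  # placeholder, not a real key
    endpoint: str    # public "host:port" of the server
    mesh_ip: str     # private address inside the mesh


def render_wg_config(me: Node, private_key: str, nodes: List[Node]) -> str:
    """Render a wg-quick style config for `me`, with every other node as a peer."""
    port = me.endpoint.rsplit(":", 1)[1]
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {me.mesh_ip}/24",
        f"ListenPort = {port}",
    ]
    for peer in nodes:
        if peer.name == me.name:
            continue  # a node never peers with itself
        lines += [
            "",
            "[Peer]",
            f"PublicKey = {peer.public_key}",
            f"Endpoint = {peer.endpoint}",
            f"AllowedIPs = {peer.mesh_ip}/32",
        ]
    return "\n".join(lines) + "\n"


# Hypothetical inventory: documentation-range public IPs, made-up mesh IPs.
NODES = [
    Node("worker-1", "<worker-1-public-key>", "203.0.113.10:51820", "10.8.0.1"),
    Node("worker-2", "<worker-2-public-key>", "203.0.113.11:51820", "10.8.0.2"),
    Node("worker-3", "<worker-3-public-key>", "203.0.113.12:51820", "10.8.0.3"),
]

if __name__ == "__main__":
    print(render_wg_config(NODES[0], "<worker-1-private-key>", NODES))
```

In a full mesh, every node carries one peer entry per other node, so adding a server means regenerating the config everywhere. That is a big part of why key management gets tedious.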

I have WireGuard keys for clients (laptop, smartphone). This allows me to access private services from anywhere. Key management is a bit of a pain though.

Because WireGuard runs over UDP and only responds to valid packets, the outside world sees no open ports at all apart from the public load balancer ports. Not even SSH.

Declarative firewall rules give me a form of ACL. Ansible is flexible enough that I can configure them almost the same way I'd configure AWS security group rules with Terraform.
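As a rough illustration of what "declarative" means here, the sketch below describes rules as data and renders them into nftables rule expressions. It's written in Python rather than Ansible to keep it self-contained. The `Rule` type, the port numbers and the mesh CIDR are assumptions made up for this example, not my actual configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    description: str
    protocol: str                 # "tcp" or "udp"
    port: int
    source: Optional[str] = None  # CIDR, or None for "from anywhere"


def to_nft(rule: Rule) -> str:
    """Render one declarative rule as an nftables rule expression."""
    parts = []
    if rule.source is not None:
        parts.append(f"ip saddr {rule.source}")
    parts.append(f"{rule.protocol} dport {rule.port}")
    parts.append("accept")
    parts.append(f'comment "{rule.description}"')
    return " ".join(parts)


# Hypothetical rule set: public HTTPS and the VPN port are open to everyone,
# SSH is only reachable from inside the (made-up) mesh network.
RULES = [
    Rule("public https", "tcp", 443),
    Rule("vpn", "udp", 51820),
    Rule("ssh from mesh", "tcp", 22, source="10.8.0.0/24"),
]

if __name__ == "__main__":
    for rule in RULES:
        print(to_nft(rule))
```

Each rule reads much like a security group entry: a protocol, a port, and an optional source range.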

Container networking

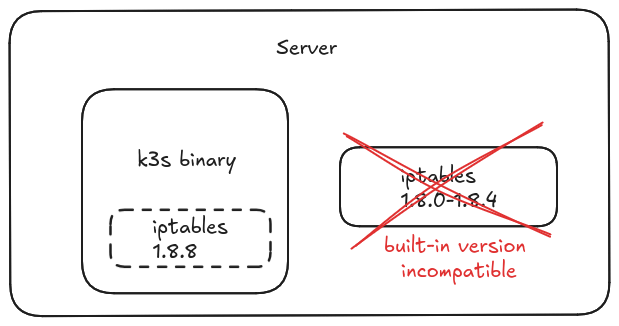

K3s, which I use here, sets up Flannel as a CNI. But because Flannel doesn't support network policies, k3s additionally ships with a network policy controller, which creates nftables rules on the fly.

Flannel works nicely with WireGuard when you pass --flannel-iface wg0 to the k3s binary.

The nice thing with self-hosting is I can choose any CNI I want. I could move to Cilium should I need more advanced capabilities than what Flannel offers. Managed Kubernetes doesn't always support custom CNIs.

With dedicated servers, unless you physically separate the firewall from your worker nodes, you run the firewall rules on the worker nodes themselves. This requires careful testing because k3s' network policy controller is currently incompatible with some versions of iptables.

In addition, given that I manage firewall rules with Ansible, I need to run a playbook to find out whether everything is up to date. I have previously worked with AWS Security Groups and Network ACLs using Terraform, and I found that to be much less error-prone than Ansible plus nftables.
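The underlying problem is drift detection: comparing the rules I want with the rules that are actually loaded. Ansible's check mode (ansible-playbook --check --diff) gives a dry run of this. The sketch below shows the same idea in plain Python, assuming both sides are already available as lists of rule strings. How you'd extract them (for example from the output of nft list ruleset) is left out, and the helper names are hypothetical.

```python
from typing import Dict, Iterable, List


def normalize(rule: str) -> str:
    """Collapse whitespace so formatting differences don't count as drift."""
    return " ".join(rule.split())


def diff_rules(desired: Iterable[str], actual: Iterable[str]) -> Dict[str, List[str]]:
    """Return rules that are missing on the host and rules nobody declared."""
    want = {normalize(r) for r in desired if r.strip()}
    have = {normalize(r) for r in actual if r.strip()}
    return {
        "missing": sorted(want - have),
        "unexpected": sorted(have - want),
    }


if __name__ == "__main__":
    desired = ["tcp dport 443 accept", "udp dport 51820 accept"]
    actual = ["tcp  dport 443 accept", "tcp dport 8080 accept"]
    print(diff_rules(desired, actual))
```

An empty result on both sides means the host matches the declared state. Anything under "unexpected" is a rule somebody (or something) added out of band.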

Operating system

Another aspect that requires setup and maintenance is the operating system. With cloud providers, Kubernetes workers are managed by the provider. They are opaque, but they "just work" and you can trust they'll have hardened their configuration.

For this website's cluster, I run Ansible playbooks to install Kubernetes, update packages, configure DNS, set up firewall rules and more on top of Linux.

Because my dedicated servers are long-lived, they can accumulate waste, which I occasionally need to clean up. I have cron jobs which regularly clean up things like old Docker images which have been pulled by the kubelet but are no longer used by any pods.
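For illustration, a cleanup job along these lines could be as small as the sketch below. It assumes the Docker CLI is available on the node and uses docker image prune. On a containerd-based node, crictl rmi --prune would be the rough equivalent. The 168-hour default is an arbitrary value picked for the example, not what I actually run.

```python
import subprocess
from typing import List


def prune_command(max_age_hours: int) -> List[str]:
    """Build the Docker CLI call that removes old, unused images."""
    return [
        "docker", "image", "prune",
        "--all",    # remove every unused image, not just dangling ones
        "--force",  # skip the confirmation prompt, cron has no terminal
        "--filter", f"until={max_age_hours}h",  # only images created before this cutoff
    ]


def prune_unused_images(max_age_hours: int = 168) -> None:
    """Run the prune and raise if Docker reports an error."""
    subprocess.run(prune_command(max_age_hours), check=True)
```

Calling prune_unused_images() from a script scheduled by cron is enough. Note that the until filter looks at when an image was created, not when it was last used.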

Public and private key infrastructure

Kubernetes components talk to each other using mutual TLS. To avoid disruption due to expired certificates, you may want to monitor expiry dates and set up alerts, especially in production environments.
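A minimal sketch of such a check, using only Python's standard library: it takes a certificate's notAfter date in the format printed by openssl x509 -enddate -noout and tells you how many days are left. The 30-day threshold and the function names are assumptions for the example. Wiring this into a real alerting system is out of scope here.

```python
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days between `now` (epoch seconds) and a notAfter date such as
    'Jan  5 09:34:43 2018 GMT'."""
    if now is None:
        now = time.time()
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def needs_alert(not_after: str, threshold_days: float = 30,
                now: Optional[float] = None) -> bool:
    """True when the certificate expires in fewer than `threshold_days` days."""
    return days_until_expiry(not_after, now) < threshold_days
```

For example, needs_alert("Jan  5 09:34:43 2018 GMT") returns True today because that date is long past, so the number of days left is negative.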

This website is deployed on k3s, which automatically renews certificates as they expire. That's one less thing to worry about.

However, the length of the PKI documentation page for mainline Kubernetes is a good indication of how complex this topic can be.

In addition to Kubernetes' internal PKI, I use cert-manager to create certificates on the fly for new ingress resources. On that front, the complexity seems to be the same as when using managed Kubernetes. cert-manager is just that good!

Block storage

Block storage is the part of my cluster that gets my heartbeat up the most when I need to tweak it.

It holds precious persistent data. Losing it would mean trying to restore backups. I say "trying", because unless you spend enough time testing your backups, you never know if they work properly until you need them.

And even if they work, you had better have stored your backups offsite, because datacenters occasionally do get completely destroyed.

Back to storage though.

With Kubernetes on cloud providers, I create a PersistentVolumeClaim with the right storage class and it "just works" because there are whole teams of engineers handling complexity silently behind the scenes. I'm fascinated when reading about the effort AWS puts into the stability of their block storage!

With self-hosting, not only do I have little information about storage performance, but I also need to choose the right hardware offering. Do I need software RAID or hardware RAID? How much redundancy do I need? Do I want a setup with only SSDs or can I make do with HDDs for some workloads? And if I have different drive types, how do I create persistent volume claims for them?

I've ended up using Longhorn. Longhorn is easy to set up and to upgrade using its Helm chart. However, things can go wrong. As I was checking the 1.7.0 release notes, I read there was a critical issue with Longhorn (to be fixed in 1.7.1) that prevented volumes from mounting and required a restore from volume snapshots.

Overall, Longhorn is a fantastic piece of software though. We are lucky to have that! Kudos to the people who created it and maintain it.

Even though Longhorn mostly "just works", I need to do capacity planning for disk space. When I need to add more disk space, one of two things can happen:

- Best case, I can attach a new drive to my dedicated server and off I go.

- Worst case, I need to decommission a worker in favor of a larger one.
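The arithmetic behind that planning can be sketched roughly as follows. It assumes every volume is stored as a fixed number of replicas spread across the nodes, so usable space is raw space divided by the replica count. The disk sizes, the replica count and the reserved fraction are illustrative numbers, not my cluster's real values, and the model ignores snapshots and filesystem overhead.

```python
from typing import Sequence


def usable_capacity_gib(disk_sizes_gib: Sequence[float], replicas: int,
                        reserved_fraction: float = 0.0) -> float:
    """Space left for volume data once replication and a safety reserve
    are accounted for."""
    raw = sum(disk_sizes_gib)
    return raw * (1 - reserved_fraction) / replicas


def headroom_gib(disk_sizes_gib: Sequence[float], replicas: int,
                 requested_gib: Sequence[float],
                 reserved_fraction: float = 0.0) -> float:
    """Remaining capacity after subtracting the sizes of all requested volumes.
    A negative result means it's time to add a drive or a bigger worker."""
    usable = usable_capacity_gib(disk_sizes_gib, replicas, reserved_fraction)
    return usable - sum(requested_gib)


if __name__ == "__main__":
    disks = [500, 500, 500]   # one hypothetical 500 GiB disk per worker
    volumes = [100, 50, 20]   # hypothetical volume sizes in GiB
    print(headroom_gib(disks, replicas=3, requested_gib=volumes))
```

With three replicas, 1500 GiB of raw disk shrinks to 500 GiB of usable space, which is why the disks fill up faster than the raw numbers suggest.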

Lock-in

Using dedicated servers means I have very little lock-in to proprietary software. I depend on the company that I rent the servers from. But most of the other stuff is open source, non-enterprise-licensed software that I can deploy anywhere else should I need to.

This self-hosted cluster is also a good way to learn how to avoid lock-in when working with managed Kubernetes environments. Knowing the pros and cons of self-hosted block storage or a self-hosted certificate manager helps me understand the pros and cons of the managed alternatives.

Summary

I hope this article gives you some practical insights into day-to-day operations of self-hosted Kubernetes clusters. Although it is time-consuming, especially for the initial setup, it's definitely a fun challenge, an opportunity to avoid lock-in and a way to keep costs under control in some cases.