Sorting a large folder of images by year

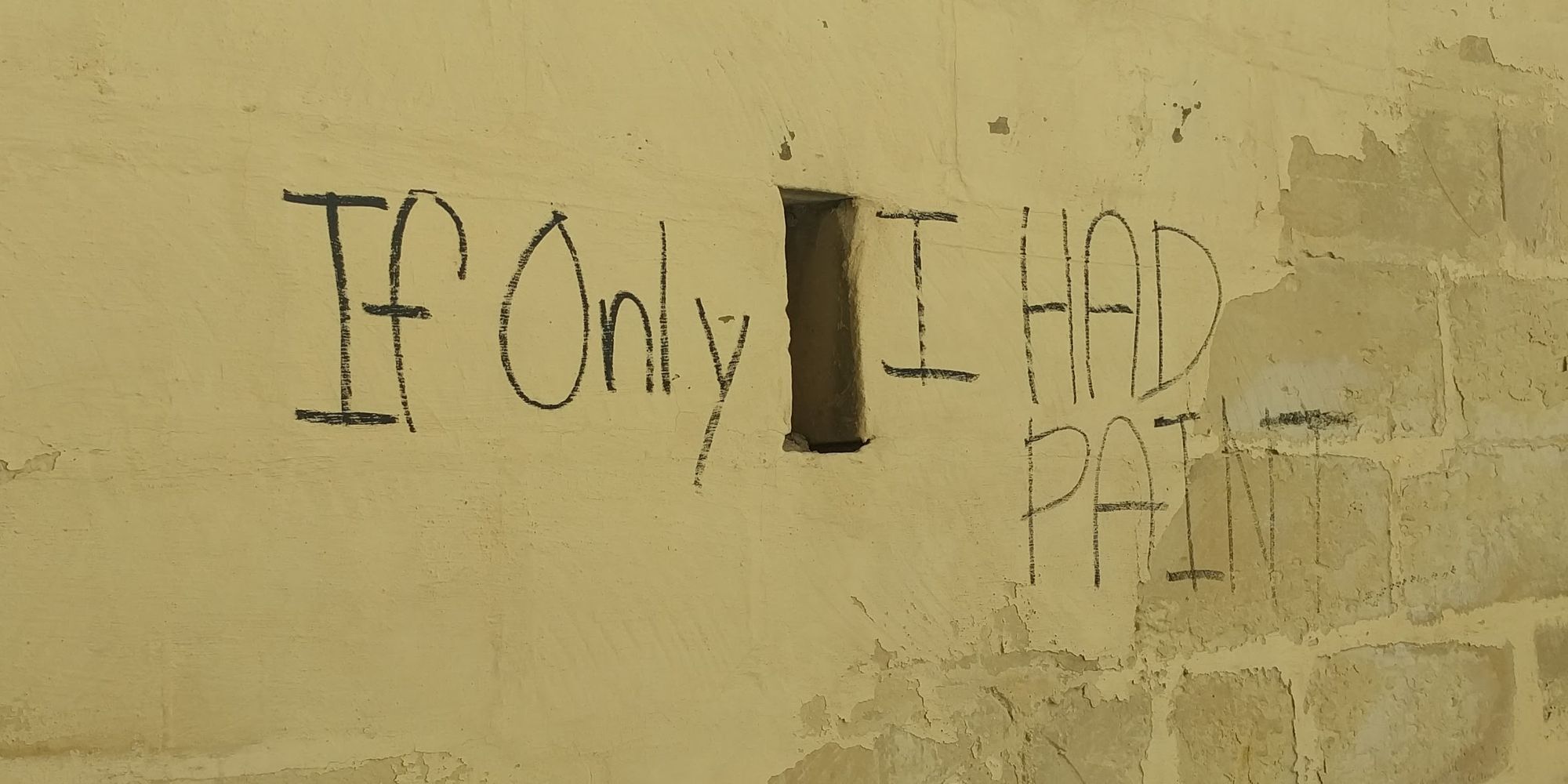

For years, I’ve been taking pictures of all kinds of things with my smartphone. The photo above, which shows a wall in Malta, is just one example. Currently, I have all these pictures in a single folder without any kind of sorting. It has grown so large that I have no idea what it contains. I’d like to sort these images.

Deleting duplicate images

Some images are duplicated. But how many really are? I use fdupes to find and automatically remove duplicate files:

fdupes -rdN Pictures/
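Because `-d` combined with `-N` keeps the first file of each set and deletes the rest without asking, it can be worth previewing the duplicates first; running `fdupes -r Pictures/` without `-d` only lists them. As an alternative that needs nothing beyond GNU coreutils, here is a minimal sketch that groups files by SHA-1 hash. `list_exact_duplicates` is a hypothetical helper name, and the `uniq -w`/`-D` options assume GNU uniq.

```shell
#!/bin/bash
# Hypothetical helper: prints every file under the given directory whose
# content is byte-for-byte identical to at least one other file there.
# Assumes GNU coreutils and findutils (sha1sum, xargs -r, uniq -w/-D).
list_exact_duplicates() {
  local dir="$1"
  find "$dir" -type f -print0 |
    xargs -0 -r sha1sum |   # one "<40-char hash>  <path>" line per file
    sort |                  # put identical hashes on adjacent lines
    uniq -w 40 -D           # print all lines whose first 40 chars repeat
}
```

Running `list_exact_duplicates Pictures/` prints one `hash  path` line per duplicated file, with each set of identical files on consecutive lines, and deletes nothing.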

This removes images that are exact, byte-for-byte duplicates. But what about nearly identical images, or a PNG that was duplicated as a JPEG? I look for software that can help with that and find Geeqie. This tutorial explains how to use Geeqie to quickly find similar images. With that, I remove a few dozen more duplicate images.

Sorting images

For me, sorting images by year is sufficient. I don’t need to know where the images were taken or what they are about. If it’s important enough, I’ll remember anyway. The images’ Exif data helps here, because it records when each photo was taken. This script looks at the files directly inside Pictures/ (it does not descend into subfolders), skips anything that is not an image or has no Exif data, creates a directory named after the year in which each photo was taken, and moves the photo into it.

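#!/bin/bash

set -e

find Pictures/ -maxdepth 1 -type f -print0 | while IFS= read -r -d '' file
do
  # Bypasses any non-image files. The checks sit inside "if" so that a
  # failing grep skips the file instead of aborting the script under "set -e".
  if ! file -b --mime-type "$file" | grep -q '^image/'; then
    continue
  fi

  # Bypasses files that don't have Exif data
  if ! exif="$(identify -verbose "$file" | grep "exif:")"; then
    continue
  fi

  # The line looks like "exif:DateTimeOriginal: 2018:05:12 14:23:01";
  # the year is the part of the second field before the first colon.
  exif_year="$(echo "$exif" | grep exif:DateTimeOriginal | head -n 1 | awk '{print $2}' | cut -d':' -f1)"

  if [ -n "$exif_year" ]; then
    echo "$file" '->' "$exif_year"
    # Year directories are created in the current working directory
    mkdir -p "$exif_year"
    mv "$file" "$exif_year"/
  fi
done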

For instance, if there is one image from 2005 and one from 2018, it creates this directory structure:

2005
  -> one-image-from-2005.jpg
2018
  -> another-image-from-2018.jpg

A few photos do not have any Exif data, so I sort these by hand.
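Exif stores `DateTimeOriginal` as `YYYY:MM:DD HH:MM:SS`, so the year is everything before the first colon. To check that parsing step on its own, here is a minimal sketch; `exif_year` is a hypothetical helper that takes the raw date string and prints the four-digit year only when the string has the expected shape. Rejecting the all-zero placeholder date is an assumption about how some devices fill in a missing date.

```shell
#!/bin/bash
# Hypothetical helper: prints the year from an Exif date string such as
# "2018:05:12 14:23:01", or nothing if the string is not a usable date.
exif_year() {
  local date="$1"
  # Require a YYYY:MM:DD date at the start; anything else counts as "no date".
  if [[ "$date" =~ ^([0-9]{4}):[0-9]{2}:[0-9]{2} ]]; then
    local year="${BASH_REMATCH[1]}"
    # Skip an all-zero placeholder date (assumed to mean "unknown").
    if [ "$year" != "0000" ]; then
      printf '%s\n' "$year"
    fi
  fi
}
```

Assuming ImageMagick's `%[EXIF:...]` format escape, it could be fed with something like `exif_year "$(identify -format '%[EXIF:DateTimeOriginal]' photo.jpg)"`. An empty result marks a photo that still needs sorting by hand.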

Creating value from useless images

After sorting those images, I realize a lot of them have no value for me personally. They don’t show anything personal, just public places, airports, and the like. I briefly think about deleting them. But what if, instead, I put my “useless” images on a stock photo website?

These images may not be useful to me right now, but they may well be useful to someone else. Or maybe they’ll be useful to me in a few years, and having a personal bank of images I can pick from would be cool.

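So I look into how to remove private information from these images. Their Exif data sometimes contains private information, such as the GPS location where a photo was taken. To avoid leaking it, I strip it all away. This also deletes the date each photo was taken, so I only do it after sorting by year: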

find Pictures/ -type f -exec exiv2 rm {} \;

Now, if I want some photos to name me as the photographer, I can add a copyright notice:

exiv2 -M"set Exif.Image.Copyright Copyright Conrad Kleinespel" copyrighted-photo.jpg

I also want to strip any potentially private information from the image names themselves. The image extensions are also inconsistent (jpg, JPG, jpeg, JPEG), so I’d like to normalize them all to jpg. So I create another script for that:

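#!/bin/bash

set -e

find Pictures/ -type f -print0 | while IFS= read -r -d '' file
do
  # Bypasses any non-image files (inside "if" so "set -e" doesn't abort)
  if ! file -b --mime-type "$file" | grep -q '^image/'; then
    continue
  fi

  sha1="$(sha1sum "$file" | cut -d' ' -f1)"

  # Lowercased extension of the file name (not of the directory path),
  # with .jpeg normalized to .jpg; empty if the name has no extension
  base="$(basename "$file")"
  ext=""
  case "$base" in
    *.*) ext=".$(echo "${base##*.}" | tr '[:upper:]' '[:lower:]')" ;;
  esac
  if [ "$ext" = ".jpeg" ]; then
    ext=".jpg"
  fi

  # Rename in place, keeping the file in its current directory
  new_path="$(dirname "$file")/$sha1$ext"
  if [ "$file" != "$new_path" ]; then
    mv "$file" "$new_path"
  fi
done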

This creates image names like 3914678325556c51762c3c709c322d4357b2163d.jpg, without any personally identifiable information.
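The fiddly part of that renaming is the extension: it has to be taken from the last dot of the file name itself, not from a dot somewhere in the directory path. Here is a minimal sketch of that step as a standalone function, assuming bash 4+ for the `${var,,}` lowercasing; `normalized_ext` is a hypothetical name, and mapping only `.jpeg` to `.jpg` mirrors what the script does.

```shell
#!/bin/bash
# Hypothetical helper: prints the lowercased extension of a file name,
# with ".jpeg" mapped to ".jpg", or nothing if the name has no extension.
normalized_ext() {
  local name="${1##*/}"     # drop the directory part of the path
  case "$name" in
    *.*) ;;                 # the name has an extension
    *) return 0 ;;          # no dot in the name: print nothing
  esac
  local ext=".${name##*.}"  # text after the last dot, dot included
  ext="${ext,,}"            # lowercase it (bash 4+)
  if [ "$ext" = ".jpeg" ]; then
    ext=".jpg"
  fi
  printf '%s\n' "$ext"
}
```

Combined with the hash, a new name could then be built as `"$(sha1sum "$file" | cut -d' ' -f1)$(normalized_ext "$file")"`.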